Beyond the Basics: How to Answer 'System Design' Questions in Data Engineering

- Tejas Agrawal

- Dec 3, 2025

- 5 min read

Updated: Dec 6, 2025

Question 1: Handling "SCD Type 2" for Patient Demographics

Scenario: Patient data, such as addresses and insurance details, changes over time. We need to maintain a full history of these changes for audit .

purposes.Question: How would you design the schema and ETL process to handle Slowly Changing Dimensions (SCD) Type 2 for a patient dimension table?

Answer:

Schema Design: I would add three columns to the patient dimension table: start_date, end_date, and is_current.

ETL Process: When a record comes in from the source:

Check if the patient ID already exists.

If it exists and data has changed, update the existing record's end_date to the current timestamp and set is_current to False.

Insert a new record with the new data, set start_date to the current timestamp, end_date to NULL (or a future date), and is_current to True.

Impact: This allows analysts to query the patient's state at any specific point in time for historical reporting.

Question 2: HIPAA-Compliant Data Masking

Scenario: Your team is moving data from a secure production database to a lower-environment (Dev/QA) for testing. This environment is accessible by external developers.Question: How do you ensure HIPAA compliance during this migration? What specific techniques would you use?

Answer:

Approach: We must implement data masking or obfuscation before the data lands in the lower environment. PII (Personally Identifiable Information) like names, SSNs, and phone numbers must be hidden.

Techniques:

Tokenization: Replace sensitive values with unique, random tokens (e.g., replace "John Doe" with "PATIENT_XYZ_123").

Hashing: Use a one-way hash function for fields that need to be joined but not read (e.g., hashing an email address).

Generalization: Reduce the precision of data (e.g., converting a date of birth 1990-05-12 to just the year 1990 or age range 30-35).

Question 3: Incremental Loading with Missing Timestamps

Scenario: You need to ingest data from a legacy laboratory system. The system does not have a "LastModifiedDate" or "CreatedAt" timestamp column.

Question: How do you identify and load only the new or changed records (incremental load) without scanning the entire dataset every day?

Answer:

Solution: I would implement a "CDC (Change Data Capture)" approach using checksums or hashing.

Hash Calculation: Create a hash (e.g., MD5 or SHA256) of the concatenation of all relevant columns for every row in the source and the target.

Comparison: Compare the source hash with the target hash.

New Hash: Insert record.

Changed Hash: Update record.

Same Hash: Ignore.

Tooling: This can be efficiently done using Spark or DBT by performing a FULL OUTER JOIN on the primary key and comparing hash values.

Question 4: Optimizing "Fact Table" Queries

Scenario: The "Claims" table has grown to 500 million rows. Analysts are complaining that queries joining "Claims" and "Doctors" are taking too long.

Question: What partitioning and indexing strategies would you apply to improve performance in a cloud data warehouse (like Snowflake or Redshift)?

Answer:

Partitioning: I would partition the "Claims" table by a high-cardinality, frequently filtered column, such as claim_date or admission_date. This allows the query engine to "prune" (skip) unnecessary partitions.

Clustering/Sorting: I would apply clustering keys (Snowflake) or sort keys (Redshift) on columns frequently used in JOINs, such as doctor_id or patient_id. This physically co-locates related data, reducing I/O during joins.

Materialized Views: For common aggregations (e.g., "Total claims per doctor per month"), I would create a pre-computed materialized view.

Question 5: Handling Late-Arriving Data

Scenario: Sometimes lab results from external partners arrive 3-4 days after the patient is discharged. Your daily report runs every midnight.

Question: How does your pipeline handle this "late-arriving data" to ensure the reports are accurate?

Answer:

Architecture: I would implement an "Upsert" (Update/Insert) logic rather than a simple "Append."

Logic:

The pipeline identifies records based on a unique lab_test_id.

If the lab_test_id already exists in the warehouse (from a previous partial load), we update it.

Crucially, the report queries must be dynamic or re-runnable. We can use a watermark approach where we process data based on arrival_time, not just event_time.

Reprocessing: If the business requires "strictly accurate" daily snapshots, we might need to implement a "restatement" process where we re-calculate metrics for the past 7 days every night to catch these stragglers.

Question 6: Choosing File Formats for a Data Lake

Scenario: You are building a Data Lake on S3 to store raw HL7 (healthcare) messages and processed analytical data.Question: Which file formats would you choose for the "Raw Zone" vs. the "Curated Zone" and why?

Answer:

Raw Zone (Landing): I would keep the original format (JSON or XML for HL7) or use Avro. Avro is row-based and stores the schema with the data, making it excellent for write-heavy operations and handling schema evolution (common in raw data).

Curated Zone (Analytics): I would convert data to Parquet or Delta Lake format. Parquet is columnar, which is highly optimized for read-heavy analytical queries (aggregations) because it allows the engine to read only the specific columns needed.

Question 7: Designing for High Availability

Scenario: Your ETL jobs run on a self-hosted Airflow instance. During a critical reporting period, the server crashes, and jobs fail.Question: How would you re-architect this setup to ensure high availability (HA)?

Answer:

Decoupling: Move away from a single server. Use a managed service like Amazon MWAA (Managed Workflows for Apache Airflow) or set up a multi-node Airflow cluster with the Celery Executor or Kubernetes Executor.

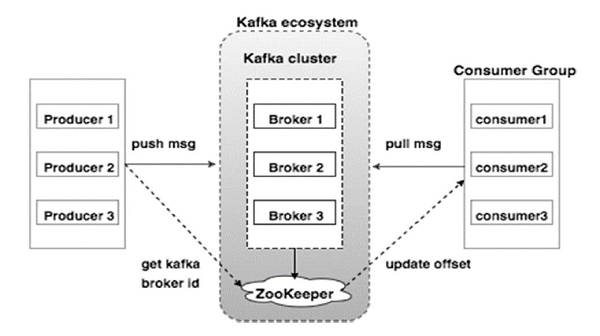

Queue Separation: Use an external message queue (like Redis or RabbitMQ) and a separate database (Postgres) for metadata. If one worker node fails, the scheduler puts the task back in the queue for another worker to pick up.

Database: Ensure the backend database (Postgres/MySQL) uses a primary-standby replication setup for failover.

Question 8: Data Quality Framework

Scenario: A doctor notices that some patients have a "Date of Birth" in the future. This erodes trust in the data.Question: Where and how would you implement automated checks to prevent this?

Answer:

Location: Implement checks at the "Transformation" stage, before loading into the final warehouse.

Tooling: Use a tool like Great Expectations or dbt tests.

Implementation:

Schema validation: Ensure dob is a valid date format.

Business Logic Check: Add a rule dob <= current_date().

Action: If a record fails, route it to a "quarantine" or "error" table for manual review instead of failing the entire pipeline. Alert the data engineering team via Slack/Email immediately.

Question 9: Handling Semi-Structured FHIR Data

Scenario: The hospital is adopting the FHIR (Fast Healthcare Interoperability Resources) standard, which uses complex nested JSON structures.

Question: How do you flatten this data for use in a relational SQL data warehouse?

Answer:

Approach: Use a processing engine like Spark or Snowflake's native JSON support.

Process:

Ingest the raw JSON into a VARIANT column (in Snowflake) or a raw string column.

Use LATERAL FLATTEN (Snowflake) or explode() (Spark) functions to un-nest arrays (e.g., a patient having multiple phone numbers or addresses).

Extract specific keys into their own columns.

Create a relational schema where the main patient info is in one table, and the "one-to-many" attributes (like addresses) are in child tables linked by patient_id.

Question 10: Dependency Management in Airflow

Scenario: You have three DAGs: DAG_A (ingests EMR data), DAG_B (ingests Billing data), and DAG_C (joins them for a Revenue Report). DAG_C must only run after both A and B have successfully finished.Question: How do you configure this dependency in Airflow?

Answer:

Option 1 (ExternalTaskSensor): In DAG_C, add an ExternalTaskSensor task that polls the status of DAG_A and DAG_B. DAG_C will wait until the sensor detects "Success".

Option 2 (Datasets/Data-Aware Scheduling): In newer Airflow versions (2.4+), define DAG_A and DAG_B to produce a "Dataset" (e.g., an S3 path). Set DAG_C to be scheduled on that dataset update (schedule=[dataset_a, dataset_b]). This is cleaner and event-driven.

Comments